If you are looking for Understand Entropy, Cross Entropy and KL Divergence in Deep Learning, you've come to the right place. We have 9 pictures about Understand Entropy, Cross Entropy and KL Divergence in Deep Learning, such as Nothing but NumPy: Understanding & Creating Binary Classification, Understand Entropy, Cross Entropy and KL Divergence in Deep Learning, and Using LeNet5 CNN architecture for MNIST classification problem on. Here they are:

Understand Entropy, Cross Entropy And KL Divergence In Deep Learning

GitHub - Nh9k/pytorch-implementation: Pytorch Implementation(LeNet

Calculate Log-Cosh Loss Using TensorFlow 2 | Lindevs

Loss Function In Machine Learning Tensorflow - MACHQI

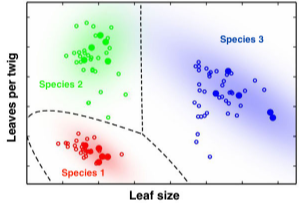

Nothing But NumPy: Understanding & Creating Binary Classification

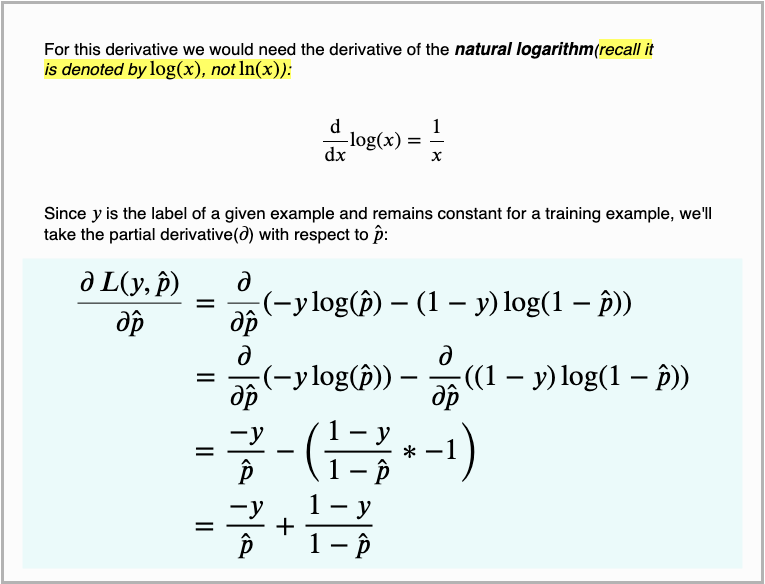

Nothing But NumPy: Understanding & Creating Binary Classification

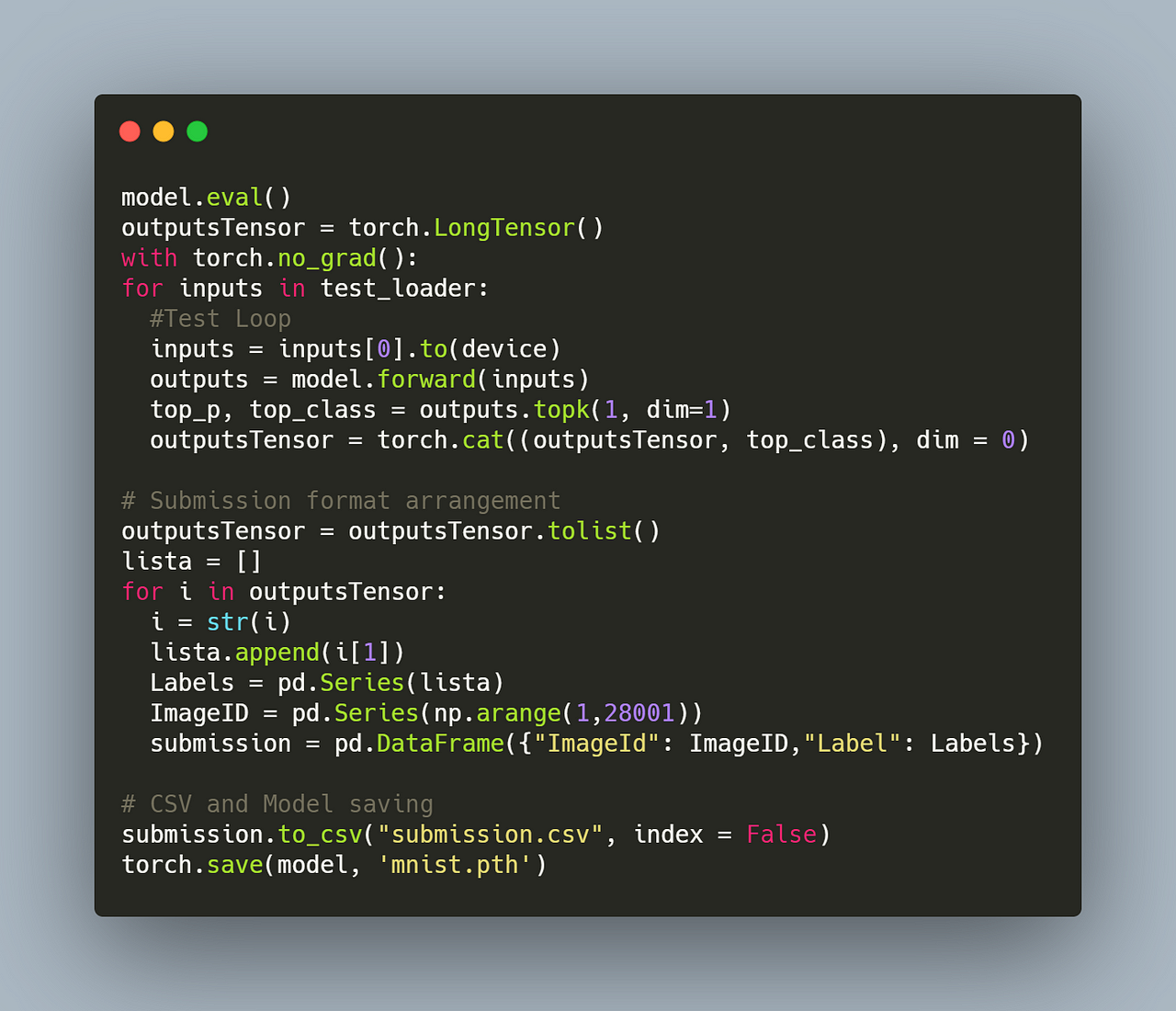

Using LeNet5 CNN Architecture For MNIST Classification Problem On

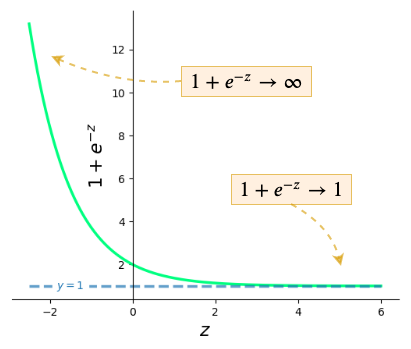

1-06. Cross Entropy

GitHub - Murtazakhan28/MNIST-dataset-classification-using-neural
